"Earth as a Simulation Series 2: Are we simulated copies of people? How, slowing down technological development in your simulation will get around the potential recursive building sims in a sim glitch problems. However, an accurately simulated population will STILL present specific experiences, despite that the technologies these experiences depend on DON'T YET exist (immersive VR experiences for example). This series presents evidence of anomalous 'missing technology' experiences & evidence of obscuration of these & evidence that the simulation we are in was built in the last few decades."

Main Page Headings List

- How Likely is it that Simulation of an Entire World would use Caching, Buffering and other Processing Overhead Reducing Techniques?

- Is there any scientific research offering evidence that this buffering could actually be happening?

- 'IF' a Simulation of an Entire World DID use Caching, Buffering and other Processing Overhead Reducing Techniques then would there be Visible Evidence of this being done?

- In an earth simulation project is it possible that implementing 'rational' processing overhead reduction strategies would result in observable odd behavioral side effects?

- Is there any evidence that this is how our unbelievably, STILL ASSUMED TO BE A REAL reality REALLY functions?

- How much Visible Evidence is there that Earth Simulation Software is Pre-Generating, Caching and Buffering Rendered Frames?

If our hypothetical earth simulation project uses techniques and strategies similar to those we already use now to reduce processing cycles and to save on processing intensive 3D frame rendering computations (which you’d expect, because it would be the outcome of what we are using now), then might there be observable side effects of it doing this that are detectable by ourselves?

How Likely is it that Simulation of an Entire World would use Caching, Buffering and other Processing Overhead Reducing Techniques?

It shouldn’t take much to extend your thinking beyond what was presented on the previous page to realize that our simulation software simply absolutely HAS to keep track of, calculate and define all interactions of absolutely everything here, in moment by moment detail, IN ADVANCE OF ABSOLUTELY ANYTHING HAPPENING. In other words, because it would be simulating copied people ACCURATELY it would be forced to accurately calculate everything well BEFORE ANYTHING VISIBLE ACTUALLY HAPPENS HERE.

Because it has to have everything pre-calculated, it is seriously likely to use a whole range of strategies for reducing processing and complex calculations. If this ‘IS’ what it does then it is actually highly likely that the software will be pre-generating a buffer (a cache) of already defined and pre-rendered frames of each simulated person and their viewable environment.

Depending on the ‘state’ of people and their immediate future circumstances this pre-buffering might actually hold many seconds of pre-defined ‘ready to render’ frames.
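For anyone who wants to picture the kind of pre-buffering being described, here is a minimal Python sketch of how present-day software keeps a short queue of already rendered frames ahead of the one being shown. The names `FrameBuffer`, `render_frame` and `lookahead` are hypothetical illustrations only, and nothing here claims to describe how any actual simulation works.

```python
from collections import deque


def render_frame(tick):
    """Stand-in for an expensive render step (hypothetical)."""
    return f"frame-{tick}"


class FrameBuffer:
    """Keeps `lookahead` frames rendered ahead of the one being shown."""

    def __init__(self, lookahead):
        self.lookahead = lookahead
        self.next_tick = 0
        self.frames = deque()
        self._fill()

    def _fill(self):
        # Top the queue back up so it always holds `lookahead` future frames.
        while len(self.frames) < self.lookahead:
            self.frames.append(render_frame(self.next_tick))
            self.next_tick += 1

    def show_next(self):
        # Show the oldest pre-rendered frame, then render one more ahead.
        frame = self.frames.popleft()
        self._fill()
        return frame


buffer = FrameBuffer(lookahead=3)
first = buffer.show_next()
pending = list(buffer.frames)
```

After the first frame is shown, `pending` still holds three frames that exist before they are displayed, which is the ‘ready to render’ idea in miniature.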

On the previous page I also made it clear that, in situations where people are attracted to each other, it is very likely that the simulation is pre-defining these people’s feelings and emotional reactions when they are SIMULATED as being with each other.

In these circumstances, ‘IF’ the simulation is pre-rendering frames AND these frames include pre-defined emotional responses then is it possible that we might be able to detect and measure any bleed through of the pre-rendered frames holding the future emotional responses before the emotional responses themselves are actually experienced?

It would be easy to monitor people and measure very subtle body changes, to try to detect whether people at least sub-consciously show that ‘something’ of them is aware of a future emotional reaction of a particular type.

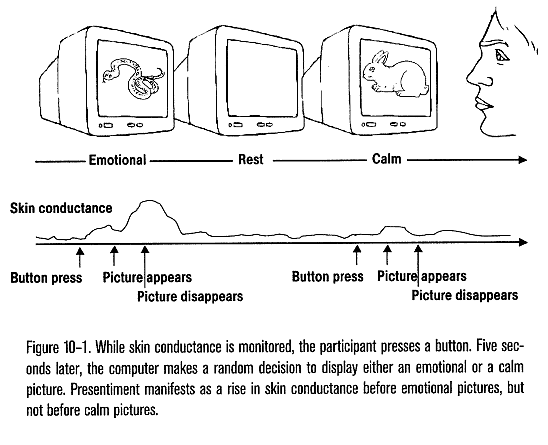

For example, to do this you could set up a computer to present different images that people would likely respond to with specific emotional reactions. You could then have someone press a button that tells the computer to display one of these images, chosen at random, a few seconds after the button is pressed.

In other words, if someone was going to be confronted by a certain event or presentation, and their reaction to this event was pre-rendered and pre-buffered by the simulation, then is it possible to pick up subtle effects in these people that could be construed as being due to commonly used data buffering techniques?

If we could measure such effects, and these measurements were even accurate enough to allow us to predict people’s emotional reactions well before specific pre-defined emotional events actually happen, then would this research be recognized as evidence that our reality appears to be making use of commonly used processing overhead reduction strategies?

Is there any scientific research offering evidence that this buffering could actually be happening?

Well, actually there is . . .

This is presented in Dean Radin’s book ‘Entangled Minds’, in Chapter 10 (entitled ‘Presentiment’) on page 161.

Dean conducted 4 experiments measuring skin conductance to see if you could predict events before they occurred.

Participants had to press a button to initiate the random presentation of pictures that were either ‘emotional’ or ‘calm’. After a 6 second delay the picture was presented. These studies showed a very significant statistical difference between the skin conductance measured before emotional pictures were presented as opposed to calm pictures.
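The trial procedure described above can be sketched in a few lines of Python. This is only an illustration of the general shape of such a trial, not Dean Radin’s actual software: `read_skin_conductance` is a hypothetical stand-in for a real sensor, the sampling rate is an assumption, and the constant readings used at the end are placeholders rather than data.

```python
import random
from statistics import mean

DELAY_SECONDS = 6  # delay between button press and picture, as described above


def run_trial(read_skin_conductance, rng, samples_per_second=1):
    """One trial: record during the delay, THEN pick the picture type.

    `read_skin_conductance` is a hypothetical callable returning one reading.
    """
    count = DELAY_SECONDS * samples_per_second
    readings = [read_skin_conductance() for _ in range(count)]
    # The category is drawn only after recording ends, so ordinary knowledge
    # of the upcoming picture cannot influence the readings.
    category = rng.choice(["emotional", "calm"])
    return category, mean(readings)


def compare(trials):
    """Mean pre-picture reading for each picture category."""
    by_category = {"emotional": [], "calm": []}
    for category, level in trials:
        by_category[category].append(level)
    return {name: mean(levels) for name, levels in by_category.items() if levels}


rng = random.Random(0)
# Placeholder sensor that always returns 1.0 (NOT real data).
trials = [run_trial(lambda: 1.0, rng) for _ in range(20)]
summary = compare(trials)
```

With a flat placeholder sensor both categories average the same, which is what you would expect by chance. The claim being tested is whether real readings differ between the two categories before the picture is even chosen.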

The combined odds against chance of the 4 separate experiments conducted are 125,000 to 1. The greater the emotional response the greater the skin conductance effect. The odds against chance of this correlation were 250 to 1.
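To put those odds into more familiar terms: under the standard convention, odds against of N to 1 correspond to a probability of 1 / (N + 1). The small helper below is my own illustration and assumes the book’s figures follow that convention.

```python
def odds_against_to_probability(odds_against):
    """Convert 'N to 1 against' into a probability, assuming standard odds."""
    return 1 / (odds_against + 1)


combined = odds_against_to_probability(125_000)  # the 4 experiments together
correlation = odds_against_to_probability(250)   # the size correlation
```

So the combined result corresponds to a chance probability of roughly 8 in a million, and the correlation to roughly 4 in a thousand.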

Is this really evidence of ‘presentiment’ or is it possible that this is evidence of hypothetical earth simulation software buffering future reality frames for each individual?

I was in two minds over which would be more likely until I read that someone had detected the same predictable response to a future event when using earthworms. With PEOPLE watching them, the earthworms would have to be properly visibly rendered, particularly if they were part of an experiment. Wouldn’t they?

I’ve been talking extensively with someone who in the past was a consultant advisor to people and groups putting together computer based simulations (in other words, I’ve been doing my homework).

‘IF’ a Simulation of an Entire World DID use Caching, Buffering and other Processing Overhead Reducing Techniques then would there be Visible Evidence of this being done?

One of the things that would be done in a simulation of this complexity is that ONLY your focused attention point would hold the attention of the simulation software. The further away ANYTHING is from your focused, engaged attention point, the more and more fuzzy the perceptual rendering of ‘whatever’ will be.

This is how you’d ‘render’ all perceptual and sensory information BECAUSE it keeps the potentially massive data processing and calculation overheads to a minimum.

Coincidentally, you might have noticed as you have been reading this that you have a highly detailed central area ‘spot’ with a fuzzy ‘periphery’ for EVERYTHING you look at. This is exactly what you would expect if you needed to save on the processing required to render a fully detailed visual presentation. Coincidentally, we also have the ‘blurring’ of fine detail as your eyes, and hence your attention, shift from one area to another, which means that you have to take a moment to ‘refocus’ on your new attention ‘spot’.
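Graphics programmers already use this trick, usually under the name ‘foveated rendering’: full detail is spent only where the viewer is looking. A minimal Python sketch of the idea follows. The function name and every number in it (a 2 degree sharp spot, a 20 degree falloff, a 10% floor) are assumptions chosen purely for illustration.

```python
def detail_level(angle_from_focus_deg, full_detail_radius_deg=2.0, falloff_deg=20.0):
    """Return a detail fraction between 0.1 and 1.0 for a point in view."""
    if angle_from_focus_deg <= full_detail_radius_deg:
        return 1.0  # inside the sharp central spot
    # Linear falloff outside the central spot, never below 10% detail.
    excess = angle_from_focus_deg - full_detail_radius_deg
    return max(0.1, 1.0 - excess / falloff_deg)


centre = detail_level(0)      # where attention is focused
mid_field = detail_level(12)  # part way out
periphery = detail_level(60)  # far edge of view
```

The further a point sits from the attention spot, the less rendering work it receives, down to a fixed minimum.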

Coincidentally, this also means that the scale of information that you have immediate access to, particularly for cross referencing and ‘joining important dots’, is also severely reduced. I say this because you have to keep moving your eyes and/or the viewed material around to ‘connect’ to possibly connected information, and it is also more difficult to ‘re-connect’ later to any connected information you are rendered as still being able to remember. If I was designing this simulation then the reduced vision spot scale handicap is something I’d very much be tempted to do too. The last thing you’d want is to have your managed people having easy visual access to a large viewing field, particularly of any ‘worrying’ material, ALL AT ONCE.

Let me give you another example of how our ‘attention engagement’ could determine rendering resolution . . .

When you first wake up in the morning AND your attention is still orientated to the ‘inside your head’ space then as you ‘groggily’ look around WITH YOUR ATTENTION STILL PRETTY MUCH IN YOUR HEAD then everything you see will ALL BE RENDERED FUZZILY. The lack of obvious detail (reduced resolution rendering uses up way less processing capacity) can have your groggy head filling in the blanks all on its own which can (for example) have you temporarily convinced that someone is standing in the corner of your room.

It is ONLY when you CONSCIOUSLY decide to engage your attention on the external environment that the ‘detail’ improves and you realize that the shadow person you were sure was standing in the corner of the room was actually made up of a mish mash of different and poorly rendered things THAT ARE ALWAYS THERE.

‘IF’ we are in a simulation then rooms you regularly use will be cached in different resolutions. When you first wake up and start looking around the software will automatically use a low resolution locally cached version UNTIL you consciously engage your attention on the room at which point it will render (or pull in from local memory) a more detailed version of the room.
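As a rough illustration of caching a room at more than one resolution, here is a short Python sketch. `RoomCache`, `view` and the `engaged` flag are hypothetical names of my own. The point is only that each version is rendered once and then reused, with the cheap version served until attention is engaged.

```python
class RoomCache:
    """Caches each room separately at 'low' and 'high' resolution."""

    def __init__(self, render_room):
        self.render_room = render_room  # hypothetical expensive render function
        self.cache = {}
        self.render_calls = 0

    def view(self, room, engaged):
        # Groggy, disengaged viewing gets the cheap version.
        resolution = "high" if engaged else "low"
        key = (room, resolution)
        if key not in self.cache:
            self.cache[key] = self.render_room(room, resolution)
            self.render_calls += 1
        return self.cache[key]


cache = RoomCache(lambda room, resolution: f"{room}@{resolution}")
groggy = cache.view("bedroom", engaged=False)
alert = cache.view("bedroom", engaged=True)
again = cache.view("bedroom", engaged=True)  # served from the cache
```

Three views of the bedroom cost only two renders, because the detailed version is kept once it has been built.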

It should be obvious that a simulation software system that has to absolutely pre-calculate everything for everybody well in advance will also ABSOLUTELY KNOW in advance when you will apply more ENGAGED ATTENTION to any area of your surroundings. It will then absolutely KNOW, many quantum clock ticks IN ADVANCE, which resolution it must use for each area of each 3D perceptual frame it renders to prevent anyone becoming suspicious.

To save on processing, it is also very likely that rooms you spend a lot of time in will even cache your own memory and habits associated with that room. You will then do things automatically, like opening the cutlery drawer without looking at it or even apparently thinking about it when you want to grab a spoon to stir your morning coffee with.

In an earth simulation project is it possible that implementing ‘rational’ processing overhead reduction strategies would result in observable odd behavioral side effects?

For example ‘IF’ you THINK of something in a specific room AND that memory is wrongly automatically associated with that room then you may find yourself forgetting that memory as you move to another room that DOESN’T have that information tagged to itself. Going back to the room where you originally had that memory would then very likely result in that memory being re-loaded when you enter the original room environment and unbelievably you’d find that what you couldn’t remember elsewhere you now can.

Saved habitual behaviours for a specific room will mean that (for example) ‘IF’ you change some aspect of a well used room then it’s likely that you’ll find yourself automatically opening the wrong drawer as you reach for a spoon AND that it will take ages for you to adjust to any new changes in a previously well used room.
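The room-tagging idea in the examples above can be modelled as a lookup keyed by location. The Python sketch below is purely illustrative: the class `RoomTaggedMemory` and its methods are hypothetical names, and it models only the described side effect, not any real memory system.

```python
class RoomTaggedMemory:
    """Stores each thought under the room it was formed in."""

    def __init__(self):
        self.by_room = {}

    def think(self, room, thought):
        self.by_room.setdefault(room, []).append(thought)

    def recall(self, current_room):
        # Only thoughts tagged to the current room are loaded.
        return list(self.by_room.get(current_room, []))


memory = RoomTaggedMemory()
memory.think("kitchen", "fetch the scissors")
in_hallway = memory.recall("hallway")      # nothing tagged here
back_in_kitchen = memory.recall("kitchen")  # the thought is re-loaded
```

A thought stored against the kitchen is unavailable in the hallway and comes back on returning to the kitchen, which is the forgetting and remembering pattern described.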

The ‘oddities’ that I describe above have actually been noted by researchers who strangely never mention ‘simulation processing overhead artefacts’ as a possible explanation for these oddities.

What ELSE would perhaps be an ‘obvious’ outcome of the simulation WAITING for you to put your engaged, focused attention on something before you ‘render’ it?

Let’s have a THINK . . . . well, in an entirely made up fake reality the more detail you zoom in on and focus upon then the more OBSERVABLY obvious the . . .

“Don’t do anything until the viewer applies direct conscious attention to ‘whatever’ they are looking at” effect will become.

The smaller the scale of consciously observed investigation (engaged attention) the greater the likelihood that this bizarreness will become more and more noticeable.

In other words, the smaller the detail an observer puts their focused, engaged attention on, the more OBSERVABLY noticeable this easily deducible computer processing saving strategy of ‘wait for the observer to CONSCIOUSLY OBSERVE before you do anything’ will become.
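In programming terms, the ‘wait for the observer’ strategy is known as lazy evaluation: nothing is worked out until something asks for it, and the answer is then kept. A minimal Python sketch, with the hypothetical names `LazyDetail` and `work_out_state`:

```python
class LazyDetail:
    """Defers an expensive computation until it is first observed."""

    def __init__(self, compute):
        self._compute = compute
        self._value = None
        self.resolved = False

    def observe(self):
        if not self.resolved:
            # First observation: only now is the detail actually computed.
            self._value = self._compute()
            self.resolved = True
        return self._value


calls = []


def work_out_state():
    calls.append("computed")
    return "state fixed"


particle = LazyDetail(work_out_state)
before = list(calls)        # nothing has been computed yet
state = particle.observe()  # computed on first look
particle.observe()          # second look reuses the stored result
```

Before the first `observe()` call no work has been done at all, and repeat observations cost nothing extra.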

‘IF’ we are in a simulation and EVERYTHING is PERCEPTUAL, then zooming in and trying to consciously engage your attention on finer and finer details of the ‘made up, pretend, assumed to be fundamental particles of reality’ will end with the simulated as conscious, attention engaged viewer getting to a point where it becomes obvious, even to simulated as professionally trained observers, that the ACTUAL rendering of the fundamental perceptual unit changes is not actually initiated until a viewer’s engaged attention is consciously applied to view them.

Is there any evidence that this is how our unbelievably, STILL ASSUMED TO BE A REAL reality REALLY functions?

With something this stupidly obvious then as a simulation designer what strategies would you use to prevent your simulated researchers from REALLY realizing the significance of this?

‘IF’ you reading this ARE an academic and/or scientist then ‘IF’ you were our hypothetical simulation’s designer then how would you MANAGE YOURSELF as you read these pages? As a simulation designer what awareness and cognitive management strategies would you implement to make sure you yourself would dismiss what are my very rational and very reasoned and logical presentations here? What individual management strategies would you use to make sure that an academic or scientist reading this wouldn’t be able to think well enough to objectively and/or IMPARTIALLY evaluate EVERYTHING presented on these pages?

I have read some papers where some researchers HAVE ACTUALLY managed to suggest ‘artificial reality’ as an explanation for the fundamental particle quirkiness, BUT once again no one takes much notice AND of course no one has actually seriously looked for MACRO SCALE evidence that we are in some sort of artificial reality. Never mind that no one has even been allowed to engage any extended APPLIED THINKING ATTENTION, such that no one has apparently managed to figure out any of the easily deduced, observable and impossible to hide differences that would be EXPECTED ‘IF’ we are in a simulated reality that is a PROJECT. Confirmation bias being the most obvious.

How much Visible Evidence is there that Earth Simulation Software is Pre-Generating, Caching and Buffering Rendered Frames?

It is a FACT that there are loads of physically observable effects which you’d EXPECT if various buffering and memory caching and processing reduction techniques were being applied to our reality here . . .

As one of the rare COMPETENT researchers, likely designated as an OFFICIAL researcher by the simulation, who is ‘worryingly’ seriously trying to get a handle on some of the bizarrenesses we are presented with here, is Dean Radin subjected to harassment by our very own easily observed Agent Smiths? These are of course another easily deduced, seriously likely possibility in a simulation, particularly one that is specifically attempting to get away with rendering self aware, supposedly free thinking people.

Strangely, the harassers do make a big effort to hound Dean which of course is a good indication that he’s taking worryingly decent research lines (Dean Radin’s blog is here).

This page and the last were a strategic diversion. I’d be embarrassed if I thought that anyone would imagine that my THINKING efforts and scale of THINKING on these subjects were all confined to just one little cubby hole topic area . . .

The next page will revert to discussing the realistic possibilities for slowing down technological advances, as well as presenting an abundance of evidence that this strategy is also operational . . .

Click the right >> link below for the next page in this series . .

December 8, 2014 @ 2:14 am

I think I have encountered a specific example of the above, happening right after I first finished this article.

As I was walking down the street on a rainy day, I found my gaze focused on the hood of a walkway of an apartment building. I noticed that the water dripping from the side of the hood that was closer to me, and the water from the other side, moved at different paces; the droplets at the back were “choppy” in presentation, falling noticeably slower than those at the front, with no obvious logical explanation for this. It was as if fewer frames were loaded for the presentation of the droplets on the far side.

Walking close enough to be a couple of feet away from the far side of the hood caused the droplets to descend smoothly, like those from the close side. Whilst there may be some logical explanation for this (to dissuade contemplation), I am betting that this is exactly what you meant about more detail being added upon zooming into specific detail.

December 11, 2014 @ 11:21 am

I have experienced something along the lines of confusion in establishing my whereabouts, i.e. what room I am waking up in.

After many years of moving around, living temporarily in other people’s homes as I looked after them as clients, I went through a particular period of intense confusion each morning as I would wake (eyes still closed) trying to figure out which house I was in, what bedroom, how it was laid out, where the loo was etc. etc. This would also happen if I woke during the night needing the loo.

This could have been to do with me not following my life script and the rendering of locations I was in being problematic for a time.

I still occasionally get this. I have been in the same job location for nearly three years, but sometimes have a problem telling whether I am at my home or work.

January 16, 2015 @ 11:01 pm

Haha this test comes to mind – count how many times the basketball is passed.

https://m.youtube.com/watch?v=oSQJP40PcGI

January 24, 2015 @ 12:30 pm

Reading this article inspired me to spontaneously use the corner of my eye to view the periphery. Just as you say, the details away from the center of the computer screen were blurry. I deliberately tried to shift my gaze VERY slowly towards the desk calendar to the far left of the screen. As I did, my eyes automatically shifted their center to the speaker right beside the screen. I tried using the soft focus technique where I deliberately chose to relax my eyes enough so I could see all items on the desk simultaneously without really focusing on any single item in particular. But it was very hard to use the technique. My eyes automatically oriented towards centering on the items closest to me or on the items that are really large, like the printer. Several times my eyes blinked, which was enough for me to revert to the usual default awareness where I only cared what was in front of me without really thinking about the blur or fuzziness of details of the rest of the items on my desk.

——–

As I am typing this comment, it feels very jarring to know that simulated humans’ sight is limited compared to the sharper vision of other simulated creatures, like the ones featured in this article: http://listverse.com/2010/12/12/10-animals-with-incredible-eyes/

August 14, 2015 @ 8:33 pm

I have experienced the same confusion upon waking up in my bedroom. This is getting worse and has happened on a regular basis over the last 2 years. I often don’t know if it is night or day, what day of the week it is, do I have to go to work, where do I work, how do I read this clock, what do those numbers mean? Some days were really bad and I lucidly woke up and was frozen. I had to tell myself I need to figure out where I am. This looks like a bedroom, I think. Why am I flat on my back? I had no idea who or where I was, just knew to expect this to take a while. It took minutes to come back. Now I expect it and go through this routine trying to “power on” each day. I really wonder if the system is a bit over stressed or not managed properly?

October 25, 2015 @ 8:32 am

I have experienced several events to confirm what you are saying in this series. Each time I got high pitched buzzing in both ears but mostly in the right ear (incidentally this goes on all the time but on a quieter level!) and then all sound / noise stopped before I saw images. These were not in my mind as I was seeing them with my physical eyes. For example, at one time I was in a McDonald’s restaurant when, for what seemed like 10 minutes, the scene changed. The people I was with were no longer there, a different family was sitting at the table opposite us and somehow from the decor and clothes of the people in this new scene, it was taking place about a decade earlier. When I came back to the current timeframe, I asked my partner if he had noticed anything different about the place or myself; he hadn’t. He hadn’t even noticed that I’d blanked out for a while!

Three other times when all sound around me vanished, I experienced the most awesome, vibrant colour display in front of my eyes. It was like rainbow coloured fireworks moving gracefully to electronic buzzing sound. When this finished I felt such a deep sense of peace and oneness and put it down to a spiritual experience. Again this was not in my mind but right in front of my eyes.

Afterwards I Google searched this phenomenon to see what could have caused it other than something mystical, and retina tearing and detachment were given as possible causes. I got my eyes tested and there was nothing wrong at all other than my usual long sightedness!

Another time I bought a book, read it from cover to cover and when I’d finished, I passed it to my partner as it was truly an inspirational masterpiece. When he started reading it and was about halfway, he got quite upset and asked how I could have bought and read a book with 7 pages missing out of it (the 7 missing pages were there, but they were just completely blank with no print whatsoever and I didn’t see them the first time round!). I didn’t believe him, because I had read every page in that book. When he handed me the book to see for myself, there were the 7 blank pages. I could have sworn those pages were printed the first time I read that book. When I told a friend of this experience, she was quite convinced that the ascended masters had filled in those pages for me whilst I was reading them – that was the only explanation we could think of!

IF we are truly living in a simulation, do we then resign to whatever we have been dished out with, because there is no way we can recreate anything? How do we change our circumstances if we know that we are capable of better? How does this doom and gloom theory tie in with your E-book which I have just started reading and at quick glance already see so much hope in the chapter titles? I think I’m confused here, please kindly spare a moment for a helpful reply. Thank you.

October 25, 2015 @ 12:47 pm

Well Sarah . . .

The McDonalds restaurant replay is easily explained. ‘IF’ you’ve a visual scene/memory implant which saves all you see then if you are in a restaurant that the person you are simulating REMEMBERS eating in many years earlier then it is very likely that they would play back the recording of the original time to see how much it had changed. You ‘here’ just get the bizarre play back with no context – due to sim obscuration tactics making it as hard as possible to figure anything out.

There will be some ‘memory’ pages appearing in the next week or so which will cover our ‘faulty’ memory recall problems very comprehensively.

Rainbow firework / psychedelic experience is very likely a ‘spiritual / imaginative’ visual implant – I’ll be writing about these in a few weeks time.

The blank pages . . . you are simulating someone that read this book with all pages intact, the person your partner is simulating bought and read the same book BUT his version had 7 blank pages. Again, the sim trying to hide the fact that we’ve not really got any freewill, by giving increased flexibility, means that many ‘unimportant’ circumstances will be significantly different to how they were originally, and these missing pages are exactly the sort of error this ‘freewill flexibility’ will result in. Basically you bought this book, thought it was great and recommended it to your partner who, because you were NOT both with each other at that specific time, bought his own copy. His copy had blank pages. So, with giving us pretend freewill you end up with your partner while you are reading the book, so he is passed and reads your version BUT as the missing pages event was significant / shocking the sim has to blank out those pages. Heads you get one set of out of context problems, tails you get another.

I’ve answered the last bit all over the place – if your understandings of reality are a fantasy then you’ve no basis to understand anything. It’s only when you’ve some idea of what you are dealing with that you actually have a chance of actually figuring out how to DEAL WITH ‘ANYTHING’ PROPERLY.

The last page that I put up – did you read this? The ‘exercise’ page? What does this imply?

Well, it implies that I’m ‘hacking’ the sim, which of course is impossible. Except it’s not, it’s actually ‘OBVIOUS’ except of course no one here can figure out even the simplest things even with pages and pages of sim evidence examples . . . can they?

So, . . . anyone with any thinking capacity should be able to figure out what that ‘exercise’ page actually implies? What that page actually implies is that I’m very likely simulating someone that was/is HACKING THE SIMULATION . . . which if I am then what would ‘could’ that possibility lead to?

Well it SHOULD lead you to the thought that if YOU were personally hacking a simulation that YOU knew had YOURSELF living within it as a simulated person then what would you do?

Well, what I’d do is I’d team up with the version of myself in the sim as part of my extensive research efforts to understand it!!! That’s what I’d personally do!!!

AND as this actually ‘IS’ what I’d personally DO . . . AND as I get my ideas and understandings from the person I’m simulating then that implies that IT would have this idea too . . . doesn’t it?

October 25, 2015 @ 4:46 pm

Thank you for the explanation, Clive – makes a lot of sense. I had a sneaky suspicion you were a master hacker! At this point in time I am ready to strangle the originator that’s causing my simulated misery, let alone team up with her so she could understand me and presumably herself!!! You know that other common new age saying – “we as soul are god experiencing itself and expanding.” mmmmm? I hadn’t read the exercise page, but will definitely follow the link – thank you. Look forward to the new pages on memory and visual implants.

February 23, 2016 @ 9:46 am

Personal example of “presentiment”: Two Saturdays ago, while my sibling and I travelled from our home to the local hot air balloon festival, I kept feeling that something “bad” or “inconvenient” would happen to us until we reached our intended hotel. The receptionist said that the hotel was fully booked even though my sibling had booked the room the week before our overnight trip. Only then did the anxiety disappear. However, instead of feeling panicky over having no place to stay, I felt oddly relaxed. This feeling made sense when we managed to check in at a neighbouring hotel after lunch, the very same one that our mom had asked us to check in to a few days ago.

March 19, 2021 @ 7:14 pm

I do find that there is an issue with time lag in my life. I get knowledge about information before it is explained to me sometimes. Also, after I signed up for the automated healing I started having weird deja vu moments which could possibly be from split versions of me going through the same scenario but just at different timelines.

March 22, 2021 @ 1:51 pm

Mmmm, (EVERYONE READ!!!) actually Han (with respect to your recent comments) I’ve wondered if you are a split/duplicate of me. As I strongly suspect that you are, then you might find it useful to read this series of pages (starting here), although read all the below first.

I basically had a very bad and debilitating stammer/speech block such that when I got my first job (medical research support scientist) I decided that I just had to get rid of this as it was too limiting. I then spent time figuring out how to do this. Bottom line, I’d been extremely traumatised as a baby/toddler/young child specifically when I was starting to try to ‘speak’, although I was also traumatised in Junior and Senior school due to bullies’ bullying efforts, kids ‘TAKING THE PISS’. So, I had trauma that caused a bad stammer that then also resulted in over a decade and a half of often daily traumatizing incidents (these only stopped in 6th form at school and also during my time at university).

So, my times as a baby/toddler resulted in a stammer, I then had bullying shit due to the stammer in both junior and senior school (contributing to yet more trauma). So, I basically had regular almost daily/weekly traumatizing incidents that then all contributed to the resulting stammer/speech block.

So, the above linked article series describes myself spending time figuring out how to ‘turn around’ the stammer, which took 5/6 years of ALL THE TIME/DAILY EFFORT to shift it from a 95% stammering/failure rate to the opposite.

However, it took another 3 to 4 years for me to become aware of the extreme base abdomen trauma which was all locked up/contained within my base abdomen/stomach area. i.e. it was this accumulated/locked abdomen trauma that was actually sabotaging me from breathing normally and freely. Coincidentally, ‘breath/breathing’ is the driving force/engine of speech!!! If you take notice of your body when you speak, then you eventually realise that you only speak when you breathe out, and you can only speak properly if your breathing outflow is smooth and also ‘uninterrupted’ (say by extreme abdominal focused trauma).

So, as a smooth outflow of air is needed for you to ‘SPEAK’ normally/fluently then having these body mechanisms locked/dysfunctional results in the stammer. I decided to get rid of the stammer and speech block about 6 months after I got my first job, I then spent quite a bit of time figuring out how to do this!!!!

In all, it took me about 9 years to turn around a very, very bad Cc c cC Cc C lLIVE stammer (i.e. to eliminate it COMPLETELY/ABSOLUTELY).

So, one of the bottom lines related to the above, is that ‘IF’ your current issues/shit are the result of past traumatizing circumstances that say went on for weeks, months or even years (in my case I had the stammer for 2 decades before deciding I ‘HAD’ to get rid of it) perhaps inclusive of regular or semiregular bullying and or other traumatizing incidents/experiences (because you’ve a stammer) then it can literally take you years before you even start to figure out the whole scale/range of the full underlying causes never mind then of how to deal with it all too, never mind then actually put in the work/effort to do this?!?!?

I decided to get rid of the stammer when I was 21 and a half. I developed my own program to do this, and it took me until the last few months of my 29th year before I got access to the underlying extreme abdomen trauma.

If you read the above linked page and then those that follow this then you’ll become aware that I had an email from someone basically calling me a liar?!?!?! On having an e-mail exchange with this ‘idiot’ I became aware that he’s a speech therapist (has been for a decade or two) and as a speech therapist, he’s apparently also got a ‘stutter’ and still has this after decades of being a speech therapist?!?!

The mind boggles, doesn’t it!?!?!?! I personally managed to figure out and get rid of my own bad stammer and speech block, BUT a speech therapist not only cannot do that BUT calls me a liar too?!?!?!

Apparently no speech therapist has managed to do what I did, which was to figure out the direct link between your abdominal breathing mechanism and SPEECH, such that if your abdominal breathing mechanism has been traumatised, then it is this that results in the presentation of a stammer, i.e. any past abdomen impacting trauma related to speaking/speech then directly interrupts the normal outflow of breath causing your speech/speaking to be blocked i.e. continually interrupted, resulting in a SSSSS ST STAAAAAAMMER!!!

BOTTOM LINE: past trauma/traumatizing experiences that were regularly experienced, because they were due to repeating incidents and/or circumstances and/or ‘ailments’ (a stammer) that happened again and again, will result in an accumulation of trauma which will also have an accumulative impact on yourself. There is no quick way of dealing with these ‘accumulated over time’ types of trauma.

March 23, 2021 @ 4:50 am

Hi Clive! I honestly did not expect such a response about me being a duplicate of you. I guess that’s why we were placed halfway around the world from each other (I’m in Singapore) and why I felt like I was a man in the past (if your assumption is correct). I didn’t exactly sense your timeline; it was more like parallel versions of Xin Ying and their revelations. I think there is a Xin Ying that goes through what I go through a day later than me and a Xin Ying that goes through her life days ahead of me, which is why sometimes I get information just before it is explained to me, as I have already ‘been through’ those scenarios. In terms of debilitating scenarios you are right! I don’t have a stammer (as far as I know, but I will pay more attention to myself to see if it happens) but more of a lack of self-esteem and meekness due to constant bullying during my childhood, and I do seem to have a fear of commitment which could have stemmed from sexual abuse. This is crazy, but I have never experienced physical sexual abuse in my life, yet recently it pops up in dreams like a recurring theme whose origin I am unable to trace. I will continue reading the articles you suggested, and thank you for your help!

Cheers!

March 23, 2021 @ 3:01 pm

I’ve noticed (over many years) a lot of versions of ‘us’, some in very decent states/circumstances others in variations of bad shit and some in unbelievably bad shit (at least one so bad I think they died (despite being relatively young)). It’s all part of the objectives of the ‘base experiment’ we are living within, particularly with respect to having a vast scale of variations of life circumstances and none to massive shit/health problems (I’m 62 now and I only have very minor health issues), plus, none all the way through to large/massive scale thinking/knowledge acquisition and anomaly evaluating abilities/skills too.

Re sex shit, it’s possible it’s related to past lives. I only started to understand some issues (for example: rising anguish and despair with no ‘this life’ origins in my mid 30s) by trying to access my past, which eventually had me gaining access to past lives and more so my original form’s experiences (the person we are a duplicate or split of somewhere down its timeline).

March 23, 2021 @ 3:03 pm

Update: I was talking with friends today. You were right, I just realised it too. I was a bit short of breath when I was talking to one of them and I found myself stuttering a bit. I also caught myself stuttering a bit today whenever there was a need to present my ideas to people. I also have this issue whereby something sounds perfectly fine in my head and then my way with words sabotages me from communicating that idea to people.

March 23, 2021 @ 5:39 pm

Oh, that does make sense, as I had done tarot before and apparently my most recent past life was rough! I was apparently extremely poor, and there were a lot of similarities in that past life which I relate to now with the rough childhood (bullying, abuse by parents, especially mom). I guess I could be on the better end of the spectrum, and it’s honestly so horrible that these presumably split versions of us had to go through this kind of horrible shit; it makes me angry. Do let me know if there is anything else I can do to help besides doing the exercises. P.S. I have also sent you an email regarding the absent healing service and how it has affected me so far. I’m just letting you know in case you have problems receiving the email, as it proved to be extremely difficult to send it to you with the usual glitches and loading problems. Cheers!